Overview

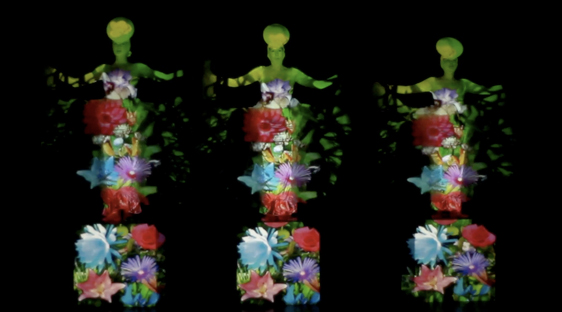

It has been some time since the experimental performance MikuMorphia and the dubious delights of being transformed into a female Japanese anime character. Since then I have cogitated and ruminated on following up the experiment with new work, and have been reading texts by Sigmund Freud and Ernst Jentsch on the nature of the uncanny, with a view to writing a positional statement on how these ideas relate to my investigations in performance and technology.

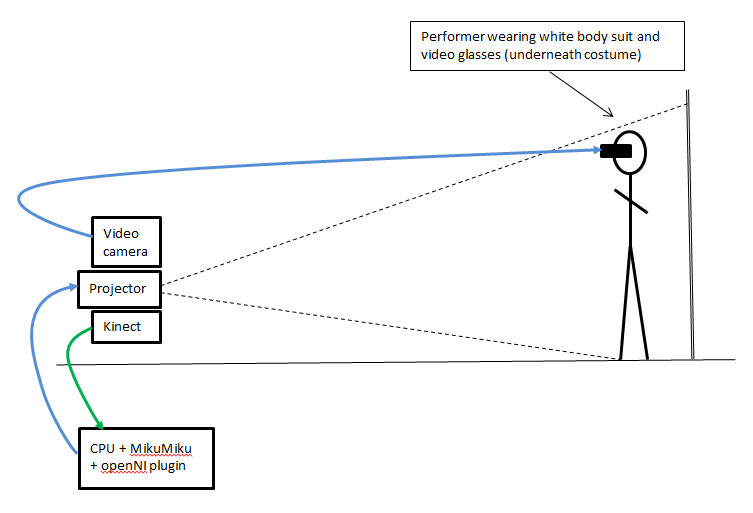

In January I moved into a bay in the Mixed Reality Lab and began to develop a more user-friendly version of the original experimental performance, so that other people could easily experience the transformation and its subsequent sense of uncanniness without having to don a white skin-tight lycra suit. Additionally, I wanted to move away from the loaded and restrictive designs of the MikuMiku prefab anime characters. I investigated importing other anime characters and ran a few tests that included the projection of backdrops, but these experiments did not break any new ground. Further, the MikuMiku software was closed, so there was no way of getting under the hood to alter its dynamics and interactive capabilities.

MikuMorphia as spectator

Rather than abandoning the MikuMiku experience altogether, I carried out some basic “user testing” with a few willing volunteers in the MR lab. Instead of having to undress and squeeze into a tight lycra body suit, participants don a white boiler suit over their normal clothes. Being a rather baggy outfit with creases and folds, this does not produce an ideal body surface for projection, but it enables people to try out the experience easily.

Observing participants trying out the MikuMiku transformation as a spectator rather than a performer made clear to me that watching the illusion and the behaviour of a participant is a very different experience from being immersed in it as a performer.

The subjective experience of seeing oneself as other is completely different from objectively watching a participant: the sense of the uncanny appears to be lost for a spectator.

Rachel Jacobs, an artist and performer, likened the experience to having the performer's internal vision of their performance character made visually explicit, rather than internalised and visualised “in the mind's eye”. The idea that a performer's character visualisation can be made explicit through the visual feedback of the projected image deserves further investigation with other performers who are experienced in character visualisation.

Video of Rachel experiencing the MikuMiku effect:

Unity 3D

My first choice of an alternative to MikuMiku is the games engine Unity 3D, which enables bespoke coding, has plugins for the Kinect, and has an asset store from which characters, demos and scripts can be downloaded and modded. In addition, the Unity Community, with its forums and experts, provides a platform for problem solving and includes examples of a wide range of experimental work using the Kinect.

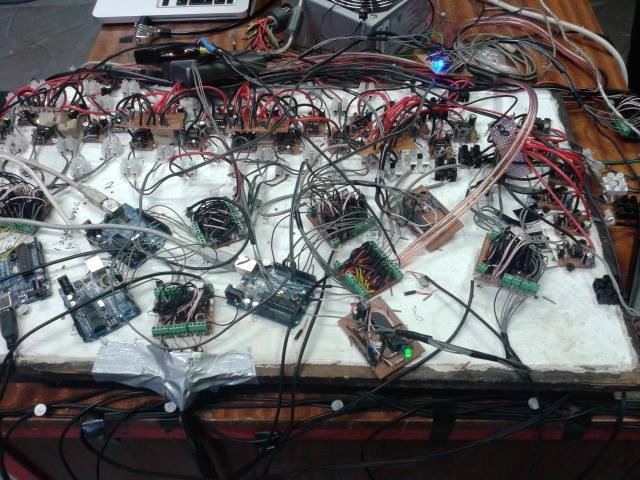

Over the last few days, with support from fellow MRL PhD student Dimitrios, I experimented with various Kinect interfaces and drivers of differing and incompatible versions. The original drivers that enabled MikuMiku to work with the Kinect used an old version of OpenNI (1.0.0.0) and Nite, with special non-Microsoft Kinect drivers. The Unity examples used later versions of the drivers and OpenNI that were incompatible with MikuMiku, which meant that I had to abandon running MikuMiku on the same machine. I managed to get a Unity demo running using OpenNI 2.0, but in this version the T-pose, which I used to calibrate the figure and the projection, was no longer supported. Calibration was automatic as soon as you entered the performance space, with the result that the projected figure was not co-located on the body.

Technical issues are tedious, frustrating and time-consuming, and they are an unavoidable element of using technology as a creative medium.

Yesterday I produced a number of new tests using Unity and the Microsoft Kinect SDK, which offers options in Unity to control the calibration: either automatic or activated by selecting a specific pose.
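To make the difference between automatic and pose-activated calibration concrete, here is a minimal sketch in Python of how a T-pose gate might work. It is not the Kinect SDK or the Unity plugin code; the joint names, the `Joint` type, the tolerances (assumed to be in metres) and the `PoseCalibrationGate` class are all hypothetical and chosen only for illustration. The idea is that calibration is withheld until the performer has held their arms out horizontally for a number of consecutive frames, rather than firing the moment someone walks into the space.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    """A tracked skeleton joint position (assumed units: metres)."""
    x: float
    y: float
    z: float


def is_t_pose(joints, height_tolerance=0.15, min_arm_span=0.5):
    """Return True when both arms are held out roughly horizontally.

    `joints` is a dict with the hypothetical keys 'shoulder_left',
    'shoulder_right', 'hand_left' and 'hand_right'.
    """
    l_sh, r_sh = joints["shoulder_left"], joints["shoulder_right"]
    l_hand, r_hand = joints["hand_left"], joints["hand_right"]

    # Each hand should be at about the same height as its shoulder.
    left_level = abs(l_hand.y - l_sh.y) <= height_tolerance
    right_level = abs(r_hand.y - r_sh.y) <= height_tolerance

    # Each hand should be well out to the side of its shoulder.
    left_offset = l_hand.x - l_sh.x
    right_offset = r_hand.x - r_sh.x
    left_out = abs(left_offset) >= min_arm_span
    right_out = abs(right_offset) >= min_arm_span

    # The two arms should point in opposite directions.
    opposite = left_offset * right_offset < 0

    return left_level and right_level and left_out and right_out and opposite


class PoseCalibrationGate:
    """Calibrate only after the T-pose is held for several frames in a row."""

    def __init__(self, frames_required=30):
        self.frames_required = frames_required
        self.held = 0
        self.calibrated = False

    def update(self, joints):
        """Feed one frame of joints; return True once calibrated."""
        if self.calibrated:
            return True
        # Any frame that is not a T-pose resets the count.
        self.held = self.held + 1 if is_t_pose(joints) else 0
        if self.held >= self.frames_required:
            self.calibrated = True
        return self.calibrated
```

Requiring the pose to be held, rather than merely detected once, is a design choice that avoids calibrating on a passing gesture while the performer is still moving into position.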

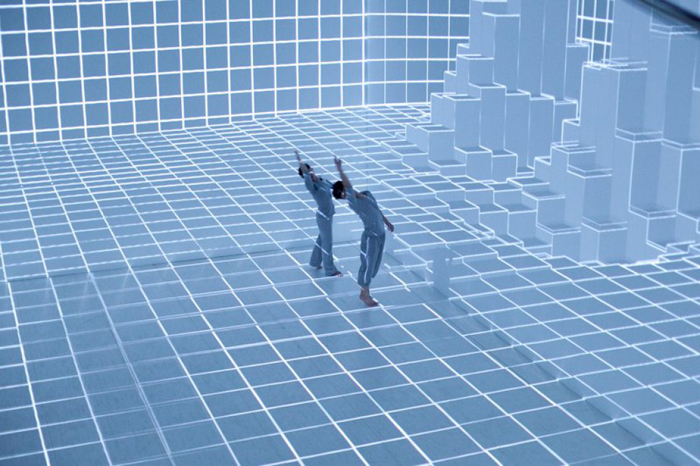

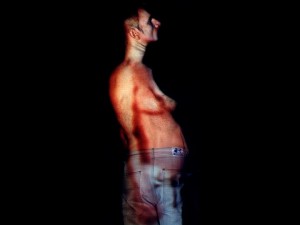

Below are three examples of these experiments, illustrating the somewhat more realistic, human-like avatars as opposed to the cartoon anime figures of MikuMiku:

Male Avatar:

Female Avatar:

Male Avatar, performer without head mask:

This last video exhibits a touch of the uncanny: the human face of the performer alternately blends with and dislocates from the face of the projected avatar, so that the human and the artificial other are simultaneously juxtaposed.

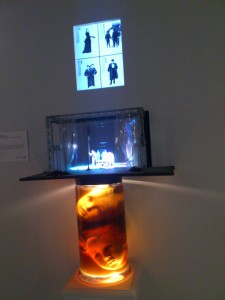

The sculpture in its religious setting, accompanied by dark ominous soundscapes, appeared as a biological receiver or transmitter of the esoteric, the setting worked beautifully and the work created a strange sense of suspended magic and purpose.
